AI and data platforms are changing how businesses operate by turning raw data into usable insight. Organizations use them to understand customer behavior, optimize operations, and develop new products and services.

At the heart of these platforms lies a powerful combination of data storage, processing, and analysis capabilities. Advanced machine learning algorithms and models are trained on vast datasets, enabling these platforms to extract valuable insights, predict future trends, and automate complex tasks. From personalized recommendations in e-commerce to real-time fraud detection in finance, AI and data platforms are reshaping the business landscape.

The Power of AI and Data Platforms

Combining artificial intelligence (AI) with a data platform gives a business the tools and infrastructure to collect, analyze, and interpret massive datasets in one place. The resulting insights feed innovation, operational improvements, and better customer experiences.

The Transformative Potential of AI and Data Platforms

AI and data platforms are transforming industries by automating tasks, improving decision-making, and creating new products and services. These platforms leverage machine learning algorithms to identify patterns, predict outcomes, and automate processes, leading to significant efficiency gains and competitive advantages.

- Healthcare: AI-powered platforms analyze medical records, predict disease outbreaks, and personalize treatment plans, which can support more accurate diagnoses and reduce healthcare costs. For example, the Mayo Clinic uses AI to analyze patient data and identify high-risk individuals for early intervention, leading to improved patient outcomes.

- Finance: AI platforms are used for fraud detection, risk assessment, and algorithmic trading, enhancing financial security and profitability. For instance, JPMorgan Chase uses AI to analyze millions of financial transactions, identifying potential fraud and reducing financial losses.

- Manufacturing: AI-powered platforms optimize production processes, predict equipment failures, and personalize product offerings, leading to increased efficiency, reduced downtime, and enhanced customer satisfaction. For example, General Electric uses AI to monitor and predict equipment failures in its jet engines, reducing maintenance costs and improving operational efficiency.

- Retail: AI platforms personalize shopping experiences, optimize inventory management, and predict customer behavior, leading to increased sales, improved customer satisfaction, and reduced inventory costs. For example, Amazon uses AI to personalize product recommendations and optimize its supply chain, resulting in a seamless shopping experience for customers.

Core Components of AI and Data Platforms

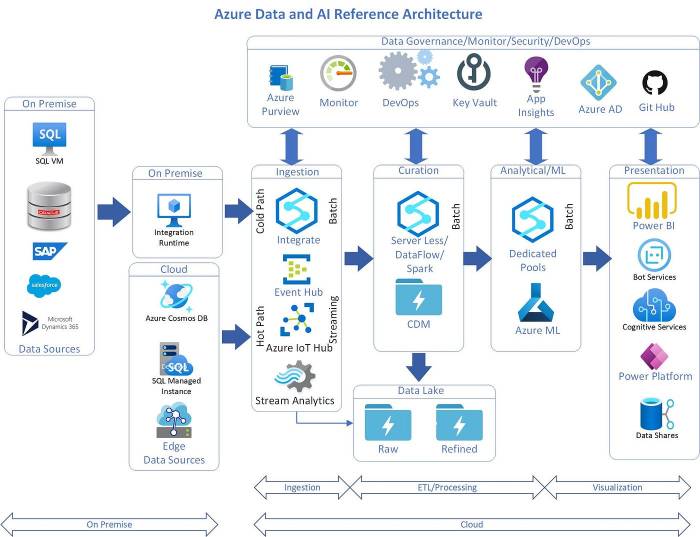

A robust AI and data platform is built upon a foundation of interconnected components that work together to collect, store, process, analyze, and deliver actionable insights from data. These platforms are designed to handle the complexities of modern data landscapes, enabling organizations to harness the power of AI for various applications.

Data Storage

Data storage forms the bedrock of any AI and data platform. It involves the collection, organization, and preservation of raw data from diverse sources. Efficient data storage is crucial for ensuring data integrity, accessibility, and scalability.

- Data Lakes: Data lakes are centralized repositories that store vast amounts of raw data in its native format, regardless of structure or type. This allows for flexibility and future analysis, as data is not immediately transformed or structured.

- Data Warehouses: Data warehouses are designed for structured data, often used for analytical purposes. Data is typically cleaned, transformed, and organized for efficient querying and reporting.

- NoSQL Databases: NoSQL databases are designed for unstructured or semi-structured data, offering flexibility and scalability for handling large volumes of data with varying structures. These are often used for storing data from social media, sensor readings, and other sources that don’t fit traditional relational database models.

Data Processing

Data processing involves transforming raw data into a format suitable for analysis and model training. This stage is crucial for cleaning, enriching, and preparing data for meaningful insights.

- Data Cleaning: This involves identifying and removing inconsistencies, errors, and duplicates from data. This ensures data quality and improves the accuracy of subsequent analyses.

- Data Transformation: This involves converting data into a format compatible with the chosen analytical tools or machine learning models. This might include tasks like data aggregation, normalization, and feature engineering.

- Data Integration: This involves combining data from multiple sources to create a unified view. This allows for a holistic understanding of data and enables cross-source analysis.

Data Analysis

Data analysis focuses on extracting meaningful insights from processed data. This involves applying statistical techniques, data visualization tools, and machine learning algorithms to uncover patterns, trends, and relationships within the data.

- Descriptive Analytics: This focuses on summarizing past data to understand what has happened. It uses techniques like dashboards, reports, and visualizations to provide insights into historical trends and patterns.

- Predictive Analytics: This involves building models to predict future outcomes based on historical data. It uses techniques like regression analysis, time series forecasting, and classification models to forecast future events and trends.

- Prescriptive Analytics: This focuses on recommending actions based on data analysis. It uses techniques like optimization algorithms and decision trees to suggest optimal courses of action based on data-driven insights.

Machine Learning Algorithms and Models

Machine learning algorithms are the core of AI-powered insights. These algorithms learn from data to identify patterns, make predictions, and automate tasks. The choice of algorithm depends on the specific task and the nature of the data.

- Supervised Learning: Algorithms learn from labeled data to predict outcomes based on input features. Examples include regression models for predicting continuous values and classification models for predicting categorical outcomes.

- Unsupervised Learning: Algorithms discover patterns and relationships in unlabeled data. Examples include clustering algorithms for grouping similar data points and dimensionality reduction techniques for simplifying complex data.

- Reinforcement Learning: Algorithms learn through trial and error by interacting with an environment and receiving feedback. This is often used for tasks like game playing, robotics, and autonomous systems.
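To make the unsupervised case concrete, here is a minimal sketch of k-means clustering on one-dimensional data, written in plain Python. The function name `kmeans_1d`, the evenly spaced initialization, and the sample spending figures are assumptions made for illustration; a production platform would normally rely on an established library.

```python
def kmeans_1d(values, k, iterations=20):
    """Group numbers into k clusters with Lloyd's algorithm (1-D k-means)."""
    # Deterministic initialization (an assumption, chosen for reproducibility):
    # pick k evenly spaced points from the sorted data.
    ordered = sorted(values)
    step = (len(ordered) - 1) / (k - 1) if k > 1 else 0
    centroids = [ordered[round(i * step)] for i in range(k)]

    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster.
        new_centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # assignments are stable, so the algorithm has converged
        centroids = new_centroids
    return centroids, clusters


# Hypothetical monthly spend per customer; no labels are provided.
spend = [12, 15, 14, 90, 95, 88]
centroids, clusters = kmeans_1d(spend, k=2)
print(clusters)  # [[12, 15, 14], [90, 95, 88]]
```

The algorithm never sees a label; the two groups emerge only from the distances between values.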

Data Acquisition and Integration

The foundation of any AI and data platform lies in the ability to gather and integrate data from diverse sources. This process involves extracting, transforming, and loading (ETL) data into a centralized repository, where it can be processed, analyzed, and utilized for AI model training and inference.

Data Acquisition Strategies

Acquiring data from various sources is the first step in building a robust AI and data platform. Data acquisition strategies involve identifying relevant data sources, establishing data access mechanisms, and ensuring data quality.

Here are some common data acquisition strategies:

- Direct Data Extraction: This method involves directly extracting data from its source using APIs, web scraping, or other data extraction tools. For example, extracting customer data from a CRM system or financial data from a stock exchange website.

- Data Streaming: In real-time scenarios, data streaming techniques allow for continuous data acquisition from various sources. Examples include collecting sensor data from IoT devices or monitoring website traffic.

- Data Warehousing: This approach involves collecting and storing large volumes of historical data in a centralized data warehouse. This data can be used for historical analysis, trend identification, and model training.

- Data Lakes: Data lakes are repositories that store data in its raw format, regardless of structure or schema. This allows for greater flexibility and scalability, enabling the storage of diverse data types.

Data Integration Challenges

Integrating data from multiple sources presents various challenges, including:

- Data Quality: Data quality is paramount for accurate AI model training and analysis. Ensuring data accuracy, completeness, and consistency is crucial.

- Data Consistency: Data from different sources may use different formats, units, and naming conventions. Integrating such data requires harmonizing these inconsistencies.

- Data Scalability: As the volume and variety of data grow, ensuring scalability in data integration becomes critical. Efficient data pipelines and distributed processing architectures are essential.

Data Integration Strategies and Tools

Effective data integration strategies are essential for overcoming these challenges. Some common strategies and tools include:

- ETL (Extract, Transform, Load): ETL pipelines automate the process of extracting data from sources, transforming it into a consistent format, and loading it into a target system. Tools like Informatica PowerCenter, Talend, and Apache NiFi are widely used for ETL processes.

- Data Virtualization: This approach creates a unified view of data without physically moving it. It allows access to data from various sources through a single interface. Tools like Denodo and TIBCO Data Virtualization provide data virtualization capabilities.

- Data Federation: Data federation allows querying data from multiple sources as if they were a single database. It eliminates the need for data replication and provides a unified view of data across sources. Federated query engines such as Trino and Presto, and database features such as SQL Server linked servers and PolyBase, support this pattern.

- Data Pipelines: Data pipelines automate the flow of data from source to destination, including transformation and processing steps. Apache Kafka and Amazon Kinesis are commonly used to move streaming data, and Apache Spark to process it at scale.
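The extract, transform, and load steps described above can be sketched with nothing but the Python standard library. The CSV content, the column names, and the `orders` table are hypothetical; the point is only to show the three stages as separate functions, with unit harmonization happening in the transform step.

```python
import csv
import io
import sqlite3

# Hypothetical source extract: amounts arrive as text and in mixed units.
RAW_CSV = """order_id,amount,unit
1,12.50,usd
2,990,cents
3,,usd
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows without an amount and convert everything to dollars."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # incomplete record, skipped in this sketch
        amount = float(row["amount"])
        if row["unit"] == "cents":
            amount /= 100
        cleaned.append((int(row["order_id"]), round(amount, 2)))
    return cleaned


def load(rows, conn):
    """Load: write the harmonized rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_usd REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT order_id, amount_usd FROM orders ORDER BY order_id").fetchall())
# [(1, 12.5), (2, 9.9)]
```

Keeping each stage as its own function makes it easy to test the transformation logic without touching the source system or the target database.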

Data Preprocessing and Feature Engineering

Data preprocessing and feature engineering are crucial steps in the AI model development process. They transform raw data into a format suitable for training AI models, improving their accuracy and performance.

Data Cleaning

Data cleaning involves identifying and correcting errors, inconsistencies, and missing values in the dataset. This process ensures data quality and prevents the model from learning from erroneous information.

- Missing Value Imputation: Replacing missing values with estimated values based on other data points or statistical methods.

- Outlier Detection and Handling: Identifying and removing or adjusting extreme values that deviate significantly from the rest of the data.

- Data Consistency Checks: Ensuring that data conforms to predefined rules and standards, such as data type, format, and range.
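As an illustration of these three cleaning steps, the sketch below imputes missing values with the median, flags outliers with Tukey's interquartile-range rule, and runs a simple range check. The choice of median imputation, the 1.5 multiplier, and the sample ages are assumptions; other imputation and outlier rules are equally valid.

```python
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def in_range(values, minimum, maximum):
    """Consistency check: True when every value lies within the allowed range."""
    return all(minimum <= v <= maximum for v in values)


# Hypothetical column of customer ages with one gap and one data-entry error.
ages = [34, 29, None, 41, 38, 250, 31]
filled = impute_median(ages)
print(iqr_outliers(filled))      # [250]
print(in_range(filled, 0, 120))  # False
```

Whether a flagged value is removed, capped, or kept is a domain decision; the code only surfaces candidates.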

Data Transformation

Data transformation involves converting data into a format suitable for AI model training. This step often involves scaling, normalization, and encoding.

- Scaling and Normalization: Transforming data to a common scale, such as between 0 and 1, to prevent features with larger scales from dominating the model.

- Encoding Categorical Features: Converting categorical variables (e.g., gender, city) into numerical values that AI models can understand. Common techniques include one-hot encoding and label encoding.
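Both transformations are short enough to write by hand, which helps show what they actually do. This sketch assumes min-max scaling to the range 0 to 1 and one-hot encoding with alphabetically ordered categories; the function names and sample values are illustrative.

```python
def min_max_scale(values):
    """Rescale numbers linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: no spread to scale
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories):
    """Return the sorted category levels and one 0/1 indicator row per input."""
    levels = sorted(set(categories))
    rows = [[1 if c == level else 0 for level in levels] for c in categories]
    return levels, rows


print(min_max_scale([20, 30, 40]))  # [0.0, 0.5, 1.0]

levels, rows = one_hot(["Paris", "Oslo", "Paris"])
print(levels)  # ['Oslo', 'Paris']
print(rows)    # [[0, 1], [1, 0], [0, 1]]
```

One-hot encoding avoids the artificial ordering that label encoding introduces, at the cost of one extra column per category.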

Feature Engineering

Feature engineering involves creating new features from existing ones or selecting relevant features for the AI model. This step can significantly impact model performance.

- Feature Selection: Identifying and selecting the most relevant features for the model based on their correlation with the target variable.

- Feature Extraction: Deriving new features from existing ones using domain knowledge or statistical methods.

- Feature Interaction: Combining existing features to create new ones that capture complex relationships between variables.
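The following sketch shows feature interaction and correlation-based feature selection together. The feature names, the toy data, and the 0.5 threshold are assumptions made for the example; Pearson correlation is only one of several possible selection criteria and captures linear relationships only.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / (spread_x * spread_y)


def select_features(features, target, threshold=0.5):
    """Keep features whose absolute correlation with the target meets the threshold."""
    return [
        name for name, column in features.items()
        if abs(pearson(column, target)) >= threshold
    ]


# Hypothetical dataset: the target happens to equal width * height.
features = {
    "width": [1, 2, 3, 4],
    "height": [2, 2, 3, 3],
    "noise": [5, 1, 4, 2],
}
# Feature interaction: combine two existing columns into a new one.
features["area"] = [w * h for w, h in zip(features["width"], features["height"])]
price = [2, 4, 9, 12]

print(select_features(features, price))  # ['width', 'height', 'area']
```

Here the engineered `area` column correlates perfectly with the target, while the `noise` column falls below the threshold and is dropped.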

Impact on AI Model Performance

Effective data preprocessing and feature engineering can significantly enhance the accuracy and performance of AI models.

- Improved Accuracy: By removing noise and inconsistencies, data preprocessing ensures that the model learns from reliable information, leading to higher accuracy.

- Faster Training: Feature selection and dimensionality reduction shrink the input the model has to process, and scaling helps gradient-based optimizers converge in fewer iterations, so training finishes sooner.

- Enhanced Generalization: Feature engineering can help the model generalize better to unseen data by capturing important patterns and relationships.

Machine Learning Model Training and Evaluation

Once data is preprocessed and ready, the next step is to train a machine learning model. This involves using the prepared data to teach the model to identify patterns and make predictions. The process of training and evaluating machine learning models is crucial for developing accurate and reliable AI applications.

Training Machine Learning Models

How a model is trained depends on the learning paradigm. In the most common case, supervised learning, the model is fed labeled data, where each data point has a corresponding output or label, and learns to map inputs to outputs by adjusting its internal parameters. Training minimizes a loss function that measures the difference between the model’s predictions and the actual labels, a process known as optimization.

- Supervised Learning: This approach involves training a model on labeled data, where the model learns to predict a specific output based on given inputs. For example, training a model to classify images of cats and dogs based on labeled images. Common supervised learning algorithms include linear regression, logistic regression, support vector machines, and decision trees.

- Unsupervised Learning: This approach involves training a model on unlabeled data, where the model learns to identify patterns and structures in the data without explicit labels. For example, clustering customers into different segments based on their purchasing behavior. Common unsupervised learning algorithms include k-means clustering, principal component analysis (PCA), and hierarchical clustering.

- Reinforcement Learning: This approach involves training an agent to learn through trial and error: the agent takes actions in an environment and receives rewards for actions that lead to good outcomes and penalties for those that do not. For example, training an agent to play a game by rewarding it for winning and penalizing it for losing. Common reinforcement learning algorithms include Q-learning and deep reinforcement learning methods such as deep Q-networks (DQN).
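For the supervised case, the idea of adjusting parameters to reduce prediction error can be sketched as gradient descent on a one-feature linear model. The learning rate, the epoch count, and the toy data (generated from y = 2x + 1) are assumptions for illustration; real training code would typically use a machine learning library.

```python
def train_linear(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example under current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Labeled training data: inputs xs with known outputs ys.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))  # 2.0 1.0
```

Each pass nudges `w` and `b` in the direction that lowers the loss, which is the optimization loop that more complex models repeat at far larger scale.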

Model Evaluation

After training, it’s crucial to evaluate the model’s performance to determine its accuracy and effectiveness. This involves testing the model on a separate dataset (not used for training) to assess its ability to generalize to new data.

- Accuracy: This metric measures the percentage of correct predictions made by the model. It’s a common metric for classification tasks, but it may not be the most informative in all cases, especially when dealing with imbalanced datasets.

- Precision: This metric measures the proportion of positive predictions that are actually correct. It’s useful for scenarios where false positives are costly, such as spam filtering, where a legitimate email flagged as spam may never be read.

- Recall: This metric measures the proportion of actual positive cases that are correctly identified. It’s important for scenarios where false negatives are costly, such as in fraud detection.

- F1-Score: This metric combines precision and recall into a single score, providing a balanced measure of model performance. It’s often used when both false positives and false negatives are undesirable.

- AUC (Area Under the Curve): This metric is used for evaluating binary classification models. It measures the area under the receiver operating characteristic (ROC) curve, which plots the true positive rate against the false positive rate for different classification thresholds.
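The metrics above follow directly from the four confusion-matrix counts, as this sketch shows. The labels, scores, and the 0.5 decision threshold are made up for the example. AUC is computed here through its pairwise interpretation: the probability that a randomly chosen positive receives a higher score than a randomly chosen negative.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def roc_auc(y_true, scores):
    """AUC as the share of positive/negative pairs ranked correctly (ties count half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives for n in negatives
    )
    return wins / (len(positives) * len(negatives))


# Hypothetical held-out test set: true labels and model scores.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1, 0.6]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold is an assumption

print(classification_metrics(y_true, y_pred))
print(roc_auc(y_true, scores))  # 0.875
```

Unlike the other four metrics, AUC does not depend on the chosen threshold, which is why it is often reported alongside them.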

Model Selection

Based on the evaluation results, the most appropriate model for a given task is selected. This involves considering factors such as the model’s accuracy, complexity, and computational cost. For example, a simple model may be preferred if the task requires fast predictions, while a more complex model may be necessary for achieving higher accuracy.

“The best model is the one that performs best on the task at hand, taking into account factors such as accuracy, complexity, and computational cost.”

Model Deployment and Monitoring

The journey of an AI model doesn’t end with training. It’s crucial to deploy these models into production environments to leverage their predictive power and solve real-world problems. However, deployment is just the first step. Continuous monitoring and model retraining are essential to maintain optimal performance and ensure the model remains effective over time.

Deployment Strategies

Deploying a trained AI model involves moving it from the development environment to a production environment where it can interact with real-time data and generate predictions. This process typically involves several steps:

- Model Packaging and Serialization: The trained model is packaged into a format suitable for deployment, often as a serialized file or a container image. This allows the model to be easily transferred and loaded into the production environment.

- Infrastructure Setup: A suitable infrastructure is set up to host the deployed model. This could involve cloud services, on-premises servers, or a combination of both. The infrastructure should be scalable and reliable to handle the volume and frequency of requests the model will receive.

- API Integration: An API is created to allow other applications or systems to interact with the deployed model. This API provides a standardized interface for sending data to the model and receiving predictions.

- Deployment and Monitoring Tools: Tools and frameworks are used to automate the deployment process, monitor model performance, and manage updates. These tools can help streamline the deployment process and ensure that the model is always operating at its best.
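The packaging and API steps can be sketched in miniature: serialize the trained parameters to a file, load them in the serving process, and wrap prediction in a handler that accepts and returns JSON. The linear model, the file name `model.json`, and the `handle_request` function are hypothetical stand-ins; a real deployment would sit behind a web framework or model server.

```python
import json
import os
import tempfile


def save_model(params, path):
    """Serialize model parameters to a JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(params, f)


def load_model(path):
    """Load model parameters back from disk in the serving environment."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def predict(model, features):
    """Linear model: bias plus the weighted sum of the input features."""
    return model["bias"] + sum(w * x for w, x in zip(model["weights"], features))


def handle_request(model, body):
    """Hypothetical API handler: JSON request body in, JSON response out."""
    payload = json.loads(body)
    return json.dumps({"prediction": predict(model, payload["features"])})


# Parameters as they might come out of training (values are made up).
model = {"weights": [2.0, 0.5], "bias": 1.0}

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "model.json")
    save_model(model, path)
    loaded = load_model(path)

response = handle_request(loaded, '{"features": [3, 4]}')
print(response)  # {"prediction": 9.0}
```

Separating the serialized artifact from the serving code means the same handler can load a retrained model without being redeployed.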

Continuous Monitoring and Retraining

Once a model is deployed, it’s essential to continuously monitor its performance and identify potential issues. Monitoring helps ensure that the model remains accurate and effective over time.

- Performance Metrics: Key performance metrics are tracked to assess the model’s accuracy, precision, recall, and other relevant indicators. These metrics are compared to baseline values to identify any significant changes or deviations.

- Data Drift Detection: Data drift occurs when the distribution of the data the model sees in production diverges from the distribution of the data it was trained on. This can lead to a decline in model performance. Monitoring tools can detect data drift and trigger model retraining to adapt to the changing data patterns.

- Model Retraining: When data drift is detected or performance degrades, the model needs to be retrained using updated data. This process involves using new data to adjust the model’s parameters and improve its accuracy.
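One simple way to detect drift in a single numeric feature is to compare a reference sample (for example, from training) with recent production values using the two-sample Kolmogorov-Smirnov statistic. The sketch below computes that statistic by hand; the 0.3 retraining threshold and the sample values are assumptions, and real monitoring would tune the threshold per feature.

```python
import bisect


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    max_gap = 0.0
    for x in sorted(set(ref) | set(cur)):
        # Fraction of each sample that is less than or equal to x.
        cdf_ref = bisect.bisect_right(ref, x) / len(ref)
        cdf_cur = bisect.bisect_right(cur, x) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap


def needs_retraining(reference, current, threshold=0.3):
    """Flag drift when the distributions differ by more than the chosen threshold."""
    return ks_statistic(reference, current) > threshold


training_sample = [1, 2, 3, 4, 5]       # hypothetical feature values at training time
live_sample = [3, 4, 5, 6, 7]           # the same feature observed in production

print(ks_statistic(training_sample, training_sample))  # 0.0
print(needs_retraining(training_sample, live_sample))  # True
```

A statistic of 0 means the two samples have identical empirical distributions, and 1 means they do not overlap at all.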

Managing Model Drift

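Model drift, the gradual decline in a deployed model’s predictive performance as the data or the relationships it learned change, is a common challenge in AI applications. Strategies for managing it include: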

- Regular Retraining: Retrain the model regularly using updated data to adapt to changing patterns. The frequency of retraining depends on the rate of data drift and the criticality of the application.

- Ensemble Methods: Combine multiple models trained on different datasets or using different algorithms to improve robustness and reduce the impact of drift.

- Adaptive Learning: Implement algorithms that can automatically adjust the model’s parameters based on new data without requiring explicit retraining.

Data Security and Privacy

The rise of AI and data platforms has brought unprecedented advancements in various fields, but it also raises critical concerns about data security and privacy. Protecting sensitive information and ensuring responsible data usage is paramount to building trust and ethical AI systems.

Data Encryption

Data encryption is a fundamental security measure that transforms data into an unreadable format, making it incomprehensible to unauthorized individuals. This helps protect sensitive information during storage, transmission, and processing.

- Symmetric Encryption: Uses the same key for both encryption and decryption. It is generally faster but requires secure key management. The current standard is AES (Advanced Encryption Standard); the older DES (Data Encryption Standard) is now considered insecure because of its 56-bit key and should not be used for new systems.

- Asymmetric Encryption: Uses separate keys for encryption and decryption, known as public and private keys. It simplifies key distribution, because the public key can be shared openly, but is computationally more expensive, so it is often used to exchange a symmetric key rather than to encrypt bulk data. Examples include RSA (Rivest-Shamir-Adleman) and ECC (Elliptic Curve Cryptography).

Access Control

Access control mechanisms restrict unauthorized access to sensitive data, ensuring that only authorized individuals can view, modify, or delete information.

- Role-Based Access Control (RBAC): Assigns users to roles with specific permissions based on their job functions. For instance, data scientists might have read and write access to training data, while engineers might only have read access.

- Attribute-Based Access Control (ABAC): Allows for more granular access control based on various attributes, such as user identity, location, device, or time of access. This provides flexibility in defining access policies based on specific conditions.
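The difference between the two models is easy to see in code. In this sketch, RBAC is a lookup from role to permitted (resource, action) pairs, mirroring the data scientist and engineer example above, and ABAC adds a condition on request attributes. The role names, the `managed_device` and `hour` attributes, and the office-hours policy are all hypothetical.

```python
# RBAC: each role maps to the (resource, action) pairs it may perform.
ROLE_PERMISSIONS = {
    "data_scientist": {("training_data", "read"), ("training_data", "write")},
    "engineer": {("training_data", "read")},
}


def rbac_allows(role, resource, action):
    """Role-based check: is this action on this resource granted to the role?"""
    return (resource, action) in ROLE_PERMISSIONS.get(role, set())


def abac_allows(role, resource, action, attributes):
    """Attribute-based check layered on top of the role check.

    Hypothetical policy: writes are only allowed from a managed device
    between 08:00 and 18:00.
    """
    if not rbac_allows(role, resource, action):
        return False
    if action == "write":
        on_managed_device = attributes.get("managed_device", False)
        in_office_hours = 8 <= attributes.get("hour", -1) < 18
        return on_managed_device and in_office_hours
    return True


print(rbac_allows("engineer", "training_data", "write"))  # False
print(abac_allows("data_scientist", "training_data", "write",
                  {"managed_device": True, "hour": 22}))  # False
```

The second call is denied even though the role permits writes, because the request attributes fail the policy condition.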

Compliance with Regulations

AI and data platforms must comply with relevant data privacy regulations, such as the GDPR (General Data Protection Regulation) in the European Union and the CCPA (California Consumer Privacy Act) in California. These regulations define requirements for data collection, storage, processing, and sharing.

- Data Minimization: Only collect and process data that is strictly necessary for the intended purpose. This reduces the risk of unauthorized access and misuse.

- Data Retention: Establish clear policies for data retention, ensuring that data is deleted or anonymized when no longer required.

- Transparency and Accountability: Provide clear information to users about how their data is collected, used, and shared. Implement mechanisms for data subject rights, such as the right to access, rectify, erase, and restrict processing.
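Data minimization and retention policies can be enforced in code at the point where records enter or leave the platform. The sketch below is illustrative only: the allowed field list, the 365-day retention window, and the record layout are assumptions, and it is not a statement of what any regulation specifically requires.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy: the only fields the stated purpose needs.
ALLOWED_FIELDS = {"user_id", "country", "created_at"}
# Hypothetical retention window.
RETENTION = timedelta(days=365)


def minimize(record):
    """Data minimization: keep only the fields needed for the stated purpose."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


def apply_retention(records, now):
    """Data retention: keep only records still inside the retention window."""
    return [r for r in records if now - r["created_at"] <= RETENTION]


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
records = [
    {"user_id": 1, "country": "DE", "email": "a@example.com",
     "created_at": datetime(2024, 1, 15, tzinfo=timezone.utc)},
    {"user_id": 2, "country": "FR", "email": "b@example.com",
     "created_at": datetime(2022, 3, 10, tzinfo=timezone.utc)},
]

kept = [minimize(r) for r in apply_retention(records, now)]
print(kept)
```

After both steps only the first record remains, and it no longer carries the `email` field.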

Ethical Considerations

Beyond legal compliance, ethical considerations play a crucial role in responsible data usage.

- Fairness and Bias: AI models should be developed and deployed in a fair and unbiased manner. This requires addressing potential biases in training data and model design to avoid discriminatory outcomes.

- Privacy by Design: Incorporate privacy considerations throughout the entire AI development lifecycle, from data collection to model deployment. This ensures that privacy is built into the system from the ground up.

- Transparency and Explainability: AI models should be transparent and explainable, allowing users to understand how decisions are made and why. This promotes trust and accountability.

Applications of AI and Data Platforms

AI and data platforms are not merely technological marvels; they are powerful tools transforming industries across the globe. These platforms are revolutionizing how businesses operate, solve complex problems, and deliver value to their customers.

Industry Applications

AI and data platforms have a wide range of applications across various industries, enabling organizations to leverage data-driven insights for improved decision-making and enhanced performance.

| Industry | Applications |
|---|---|
| Healthcare | AI-powered diagnostic tools, personalized treatment plans, drug discovery, medical imaging analysis, patient monitoring, and predictive analytics for disease outbreaks. |
| Finance | Fraud detection, risk assessment, credit scoring, algorithmic trading, personalized financial advice, and customer churn prediction. |
| Retail | Personalized recommendations, targeted marketing campaigns, inventory management, demand forecasting, customer segmentation, and fraud detection. |
| Manufacturing | Predictive maintenance, quality control, production optimization, supply chain management, and automated process control. |

The Future of AI and Data Platforms

The field of AI and data platforms is rapidly evolving, driven by advancements in computing power, data availability, and algorithm development. These advancements are poised to reshape industries and transform society in profound ways. This section explores emerging trends and their potential impact, offering insights into the future of data-driven decision-making and the role of AI in shaping our world.

Advancements in AI and Data Platform Technologies

The future of AI and data platforms is characterized by several key advancements. These advancements are not isolated but are interconnected, reinforcing each other and driving further innovation.

- Edge Computing and the Internet of Things (IoT): The rise of edge computing and the Internet of Things (IoT) is expanding the reach of AI and data platforms beyond centralized data centers. This enables real-time data processing and analysis at the edge, closer to where data is generated. For example, autonomous vehicles can leverage edge computing to process sensor data and make real-time decisions, without relying on a centralized cloud infrastructure.

- Quantum Computing: Quantum computing could extend AI and data platform capabilities. For certain classes of problems, quantum algorithms promise large speedups over classical computers, which may eventually enable more complex AI models and new kinds of large-scale analysis. Practical, large-scale quantum hardware is still under development, but potential application areas include drug discovery, materials science, and financial modeling.

- Explainable AI (XAI): As AI models become more complex, understanding their decision-making processes becomes increasingly important. Explainable AI (XAI) focuses on developing AI systems that can provide transparent and understandable explanations for their predictions. This is crucial for building trust and ensuring responsible AI deployment, particularly in sensitive areas like healthcare and finance.

- Generative AI: Generative AI models, like large language models (LLMs), are capable of creating new content, such as text, images, and code. These models are finding applications in areas like content creation, design, and software development. The ability to generate high-quality content automatically has the potential to significantly impact various industries.

Wrap-Up

As AI and data platforms continue to evolve, their impact on our world will only grow. With the ability to analyze massive amounts of data and generate actionable insights, these platforms are poised to drive innovation, improve decision-making, and shape the future of industries across the globe. The potential is immense, and the journey of AI and data platforms is one that promises to transform the way we live, work, and interact with the world around us.